Leveraging Threat Intel for Event Enrichment In Security Onion

Introduction

In this article, we’ll walk through how to use Filebeat modules and the enrichment functionality built into the Elastic Stack to enrich log data in Security Onion with threat intelligence from external sources. By adding pertinent information from threat intel events, we can more easily determine if communication happening across our network might be related to a particular malware campaign or threat actor. This information can be used through searching and stacking in Hunt, or through detection rules in Playbook.

Prerequisites

- Security Onion 2.3.110 or newer installed as a standalone or distributed deployment

- MISP server installed and running, reachable from the Security Onion manager or standalone node

Collect Threat Intel

The first step we need to take is actually collecting threat intelligence data from our favorite source. The currently supported filesets for the Filebeat Threat Intel module include:

- Abuse URL (abuse.ch)

- Abuse Malware (abuse.ch)

- MISP

- Malware Bazaar

- OTX (AlienVault)

- Anomali Limo

- Anomali ThreatStream

- ThreatQuotient

This walkthrough will demonstrate the usage of TI from MISP, although the setup of other filesets should be similar.

To learn more about setting up MISP and automation, see:

https://www.misp-project.org/download/

https://www.circl.lu/doc/misp/automation/

Module Configuration

To start collecting TI data, we need to supply Security Onion with the necessary configuration.

Security Onion uses pillar files for SaltStack to configure the system appropriately. These pillar files abstract application-specific configuration into a central structure that ultimately provides for easier customization and management of the Security Onion grid.

We can use the following code within a pillar file to configure the MISP fileset:

filebeat:
  third_party_filebeat:
    modules:
      threatintel:
        misp:
          enabled: true
          var.input: httpjson
          var.url: https://$MISP/events/restSearch
          var.api_token: $MISPAPITOKEN
          var.interval: 3600
          var.first_interval: 24h
          var.ssl.verification_mode: none  # adjust or remove this setting in production

The above configuration will pull all TI data from the last 24 hours during its first run, then run hourly after that. We can also apply additional filters if we wish to only pull indicators of certain types, or for a particular threat level:

https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-module-threatintel.html

Placeholders used in this section:

- $MISP refers to the MISP server from which you will be gathering TI data

- $MISPAPITOKEN refers to the API key for MISP

- $MANAGER refers to the name of your Security Onion manager node, or standalone node if not using a distributed deployment

We’ll need to add this to the bottom of the following file, then save it: /opt/so/saltstack/local/pillar/minions/$MANAGER.sls
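Filling in the placeholders by hand is easy to get wrong, so here is a minimal Python sketch that renders the pillar fragment with the standard library's string.Template, which happens to use the same $NAME placeholder style. The function name render_pillar and the example host and token values are hypothetical; the YAML itself is the fragment from this article.

```python
from string import Template

# Pillar fragment from this article, with $MISP and $MISPAPITOKEN as placeholders.
PILLAR_TEMPLATE = Template("""\
filebeat:
  third_party_filebeat:
    modules:
      threatintel:
        misp:
          enabled: true
          var.input: httpjson
          var.url: https://$MISP/events/restSearch
          var.api_token: $MISPAPITOKEN
          var.interval: 3600
          var.first_interval: 24h
          var.ssl.verification_mode: none
""")


def render_pillar(misp_host: str, api_token: str) -> str:
    """Return the pillar fragment with both placeholders filled in."""
    # substitute() raises KeyError if a placeholder has no value supplied.
    return PILLAR_TEMPLATE.substitute(MISP=misp_host, MISPAPITOKEN=api_token)


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(render_pillar("misp.example.com", "REPLACE_WITH_REAL_KEY"))
```

The printed text can then be appended to the minion pillar file. Indentation matters in YAML, so the fragment should be pasted exactly as rendered.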

In the following example, we’ve adjusted the var.interval value to speed testing along:

Next, we’ll restart Filebeat with so-filebeat-restart.

Filebeat will pick up the changes from the pillar file and enable the MISP fileset input for the Threat Intel module, pulling TI data, and ultimately inserting it into Elasticsearch.

Once we’ve successfully gathered threat intelligence data, we should see it within an appropriate Elasticsearch index, like so-threatintel-$DATE.

We can check this by querying in Hunt for event.module:threatintel:

…or from the Security Onion CLI:

so-elasticsearch-query _cat/indices | grep threat

To view the event data from the CLI, we can run something like:

so-elasticsearch-query 'so-threatintel-2022.03.23/_search?q=*' | jq '.hits.hits[0]._source.threatintel'

If we don’t see any data, we can check the Filebeat log for clues in /opt/so/log/filebeat/filebeat.log.
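If jq isn't available, the same check can be sketched in Python. The response below is a made-up example in the general shape of an Elasticsearch search response, not real MISP data, and first_threatintel is a hypothetical helper name. It mirrors the jq filter .hits.hits[0]._source.threatintel and returns None when no threat intel documents came back.

```python
import json

# Made-up response shaped like an Elasticsearch _search result.
# The values are placeholders for illustration, not real threat intel.
SAMPLE_RESPONSE = json.loads("""
{
  "hits": {
    "hits": [
      {
        "_index": "so-threatintel-2022.03.23",
        "_source": {
          "event": {"module": "threatintel"},
          "threatintel": {
            "indicator": {"ip": "192.168.6.240"}
          }
        }
      }
    ]
  }
}
""")


def first_threatintel(response: dict):
    """Return the threatintel object of the first hit, or None if there is none."""
    hits = response.get("hits", {}).get("hits", [])
    if not hits:
        return None  # nothing indexed yet: time to check the Filebeat log
    return hits[0].get("_source", {}).get("threatintel")


if __name__ == "__main__":
    print(json.dumps(first_threatintel(SAMPLE_RESPONSE), indent=2))
```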

Create an Enrich Policy

After we’ve confirmed we have threat intelligence data in ES, the next step is to create an enrich policy to determine what fields are to be added to an event when an indicator is matched with a field value from an incoming document.

The following is an example of an enrich policy:

{
  "match": {
    "indices": "so-threatintel-*",
    "match_field": "threatintel.indicator.ip",
    "enrich_fields": [
      "threatintel.indicator.provider",
      "threatintel.misp"
    ]
  }
}

The above policy uses indices matching the pattern so-threatintel-* as its source.

This policy tells Elasticsearch that when an incoming document has a field value (in a field we point the enrich processor at) that matches the value of threatintel.indicator.ip in a so-threatintel index, it should add the fields listed in enrich_fields to the incoming document. In the command below, those fields are threatintel.misp.threat_level_id, threatintel.misp.info, and threatintel.misp.id.

To create the enrich policy, we’ll run the following command (on the manager node, or standalone):

sudo so-elasticsearch-query _enrich/policy/ti-enrich-policy -d '{"match": {"indices": "so-threatintel-*","match_field": "threatintel.indicator.ip","enrich_fields": ["threatintel.misp.threat_level_id", "threatintel.misp.info", "threatintel.misp.id"]}}' -XPUT

Once we’ve run the command, we should receive confirmation of successful creation:

If we made a mistake, we could also delete the policy before applying again, by running the following command:

sudo so-elasticsearch-query _enrich/policy/ti-enrich-policy -XDELETE
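Hand-writing the single-line JSON for the -d argument is error-prone. As a rough sketch, the policy body can be built as a Python dict and serialized, which guarantees valid JSON and safe shell quoting. The helper name build_enrich_policy is hypothetical; the field names and the so-elasticsearch-query invocation are the ones used in this article.

```python
import json
import shlex


def build_enrich_policy(indices, match_field, enrich_fields):
    """Return the body of a 'match' type enrich policy as a dict."""
    return {
        "match": {
            "indices": indices,
            "match_field": match_field,
            "enrich_fields": list(enrich_fields),
        }
    }


policy = build_enrich_policy(
    "so-threatintel-*",
    "threatintel.indicator.ip",
    [
        "threatintel.misp.threat_level_id",
        "threatintel.misp.info",
        "threatintel.misp.id",
    ],
)

# Compact JSON, quoted so the shell passes it through as one argument.
payload = json.dumps(policy, separators=(",", ":"))
command = "sudo so-elasticsearch-query _enrich/policy/ti-enrich-policy -d {} -XPUT".format(
    shlex.quote(payload)
)
print(command)
```

This only prints the command; running it is still a manual step on the manager or standalone node.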

Execute the Enrich Policy

After creating the enrich policy, we’ll need to execute it so that an enrich index is created from the data in the source index. This enrich index will contain the field used for matching (for example, threatintel.indicator.ip), as well as the data to be appended to incoming documents that match on that field.

To execute the enrich policy we just created, we’ll run the following command:

sudo so-elasticsearch-query _enrich/policy/ti-enrich-policy/_execute -XPUT

To check if the enrich index was created, we can run the following command:

sudo so-elasticsearch-query _cat/indices | grep enrich

To view the documents in the enrich index, we can run something like:

sudo so-elasticsearch-query '.enrich-ti-enrich-policy-1648000395809/_search?q=*' | jq

Here we can see that the enrich index contains the indicator IP, as well as the other fields we defined.
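To make the idea of the enrich index concrete, here is a simplified Python model of what executing the policy produces: a lookup table keyed by the match field, holding only the match field and the enrich fields. This is a sketch of the concept, not how Elasticsearch implements it. The source documents are made up, the helper names are hypothetical, and the model keeps only one entry per indicator value.

```python
def get_path(doc, dotted):
    """Fetch a nested value such as 'threatintel.indicator.ip'; None if missing."""
    current = doc
    for key in dotted.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def set_path(doc, dotted, value):
    """Set a nested value, creating intermediate dicts as needed."""
    keys = dotted.split(".")
    current = doc
    for key in keys[:-1]:
        current = current.setdefault(key, {})
    current[keys[-1]] = value


def build_enrich_index(source_docs, match_field, enrich_fields):
    """Model of policy execution: keep only the match field and enrich fields."""
    index = {}
    for doc in source_docs:
        key = get_path(doc, match_field)
        if key is None:
            continue  # without the match field there is nothing to look up
        entry = {}
        set_path(entry, match_field, key)
        for field in enrich_fields:
            value = get_path(doc, field)
            if value is not None:
                set_path(entry, field, value)
        index[key] = entry
    return index


# Made-up threat intel documents, for illustration only.
SOURCE_DOCS = [
    {"threatintel": {
        "indicator": {"ip": "192.168.6.240", "type": "ipv4-addr"},
        "misp": {"id": "1", "info": "Example event", "threat_level_id": "2",
                 "uuid": "not-copied"},
    }},
    {"threatintel": {"indicator": {"url": "http://example.invalid/"}}},
]

ENRICH_INDEX = build_enrich_index(
    SOURCE_DOCS,
    "threatintel.indicator.ip",
    ["threatintel.misp.threat_level_id", "threatintel.misp.info", "threatintel.misp.id"],
)
print(ENRICH_INDEX)
```

Because the enrich index is a snapshot, indicators that arrive in the source index later are not visible to enrichment until the policy is executed again.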

Create Enrich Pipeline

Now that we have an enrich policy and index created, all that’s left to do is to reference the enrich policy in an ingest pipeline so that we can match particular fields against indicators from our TI data.

To do this, we can create a dedicated pipeline, or modify an existing pipeline.

In our case, we’ll create a small, dedicated pipeline that will run at the end of the ingestion phase as the final pipeline. This pipeline will attempt to match the source or destination IP address against an indicator IP field defined for matching in the enrich index:

{
  "description" : "MISP Enrichment",
  "processors" : [
    { "enrich": { "policy_name": "ti-enrich-policy", "target_field": "match", "field": "source.ip", "ignore_failure": true }},
    { "enrich": { "policy_name": "ti-enrich-policy", "target_field": "match", "field": "destination.ip", "ignore_failure": true }}
  ]
}

Any data defined within the enrich index to be included with the match will be put under the match field in the incoming document.
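The behavior of the two enrich processors can be modeled in a few lines of Python. This is a simplified sketch, not the Elasticsearch implementation: the enrich index is a plain dict with made-up contents, and run_pipeline is a hypothetical name. It shows that a lookup miss leaves the event untouched, and that because both processors write to the same target field, a destination.ip match would replace an earlier source.ip match (assuming the processor's default behavior of overwriting an existing target field).

```python
def get_path(doc, dotted):
    """Fetch a nested value such as 'destination.ip'; None if missing."""
    current = doc
    for key in dotted.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


# Made-up enrich index contents, keyed by indicator IP.
ENRICH_INDEX = {
    "192.168.6.240": {
        "threatintel": {
            "indicator": {"ip": "192.168.6.240"},
            "misp": {"id": "1", "info": "Example event", "threat_level_id": "2"},
        }
    }
}

# The two processors from the pipeline, reduced to the parts the model needs.
PROCESSORS = [
    {"field": "source.ip", "target_field": "match"},
    {"field": "destination.ip", "target_field": "match"},
]


def run_pipeline(doc, enrich_index, processors):
    """Apply each enrich step in order; a miss leaves the document unchanged."""
    for proc in processors:
        value = get_path(doc, proc["field"])
        hit = enrich_index.get(value) if value is not None else None
        if hit is not None:
            doc[proc["target_field"]] = hit
    return doc


if __name__ == "__main__":
    event = {"source": {"ip": "10.0.0.5"}, "destination": {"ip": "192.168.6.240"}}
    print(run_pipeline(event, ENRICH_INDEX, PROCESSORS))
```

If we needed to know which side of the connection matched, one option would be to give each processor its own target field.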

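We’ll paste the above code into /opt/so/saltstack/local/salt/elasticsearch/files/ingest/enrich.misp on the manager node, then run so-elasticsearch-restart on all applicable nodes. The file name becomes the pipeline name, which is why we refer to the pipeline as enrich.misp from here on.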

Final Pipeline

To have the pipeline run as the final pipeline (so that it runs after all others) we need to make the following changes:

Dynamic:

sudo so-elasticsearch-query so-zeek-2022.03.23/_settings -d'{"index":{"final_pipeline": "enrich.misp"}}' -XPUT

This configuration takes effect immediately for the so-zeek-2022.03.23 index.

You'll want to do this for all applicable indices (any current open indices that you would like to enrich). You don't necessarily need to do this if you prefer to wait until the next daily index is created, or if you wish to delete the current one.
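When several open indices need the setting, typing each command by hand gets tedious. The following sketch generates the same command for a list of index names. The helper name final_pipeline_command is hypothetical, and the second index name in the example is made up; substitute the indices that actually exist on your grid.

```python
import json
import shlex


def final_pipeline_command(index_name, pipeline="enrich.misp"):
    """Build the settings command that sets final_pipeline on one index."""
    body = json.dumps({"index": {"final_pipeline": pipeline}})
    return "sudo so-elasticsearch-query {}/_settings -d {} -XPUT".format(
        shlex.quote(index_name), shlex.quote(body)
    )


if __name__ == "__main__":
    # Index names are examples; list your own with: so-elasticsearch-query _cat/indices
    for name in ["so-zeek-2022.03.23", "so-import-2022.03.23"]:
        print(final_pipeline_command(name))
```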

Static:

Modify/add the index definition in /opt/so/saltstack/local/pillar/global.sls:

elasticsearch:
  index_settings:
    so-import:
      index_template:
        template:
          settings:
            index:
              final_pipeline: enrich.misp
              number_of_shards: 1
      warm: 7
      close: 45
      delete: 365

This configuration will take effect once a new import index is created. We chose to apply the settings for the so-import index in this example, since we will be testing with so-import-pcap later. Make sure to apply the same type of change to any indices you wish to enrich.

Once this configuration is in place, run so-elasticsearch-restart on the manager and all applicable nodes.

Test Enrichment

In order to test enrichment, we'll need events to match against our threat intel data. For events to flow through the data pipeline, we need to be performing live traffic monitoring or host collection, replaying traffic to Security Onion's sniffing interface, or importing a PCAP. Next, we'll walk through creating a PCAP, then we'll import it with so-import-pcap to generate events to test enrichment.

Create a PCAP

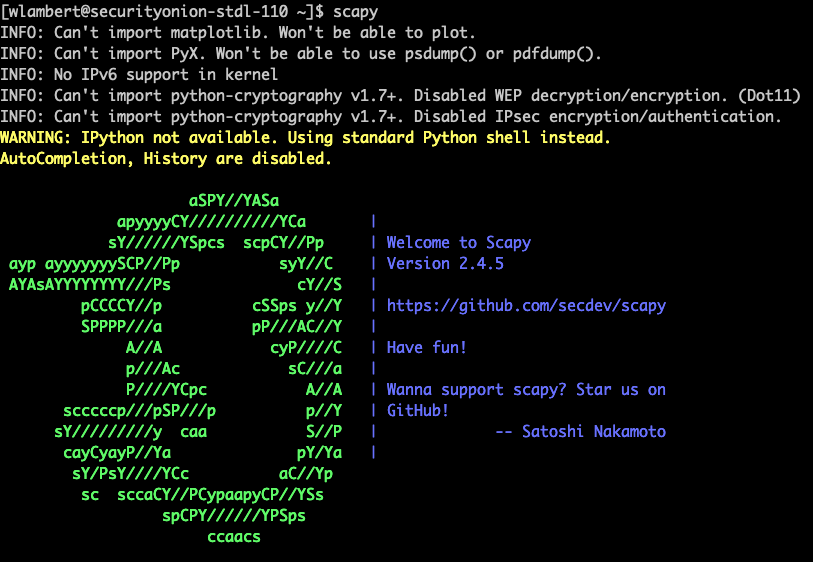

To create a PCAP, we'll leverage Scapy.

For more information on Scapy, see: https://scapy.net

Scapy can be installed like so:

sudo yum install python3-scapy

After installing Scapy, we'll invoke it with the following command to get started:

sudo scapy

Next, we'll create a packet with a destination IP address that matches an attribute defined within a MISP event (which will be pulled into the threat intel index, as well as the enrich index), and a payload of Testing MISP TI Enrichment:

pkt = Ether()/IP(dst="192.168.6.240")/TCP()/"Testing MISP TI Enrichment"

Then, we'll write the PCAP to a local file called so-testing.pcap:

wrpcap("so-testing.pcap", pkt)

After that, Scapy can be exited with exit(), or Ctrl+D.

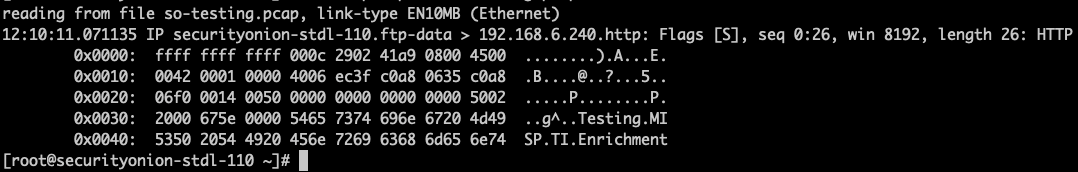

If we want to double-check the contents of our PCAP, we can do the following:

sudo tcpdump -r so-testing.pcap -XX

Import the PCAP

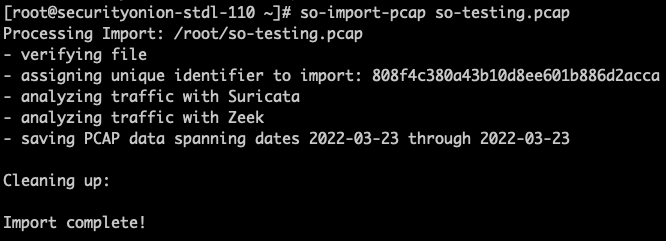

Now that we've created a PCAP for testing, we can import the PCAP and have it analyzed by various services, as well as preserve the original timestamps.

To import the PCAP, we'll use so-import-pcap, supplying it with the name of our PCAP:

sudo so-import-pcap so-testing.pcap

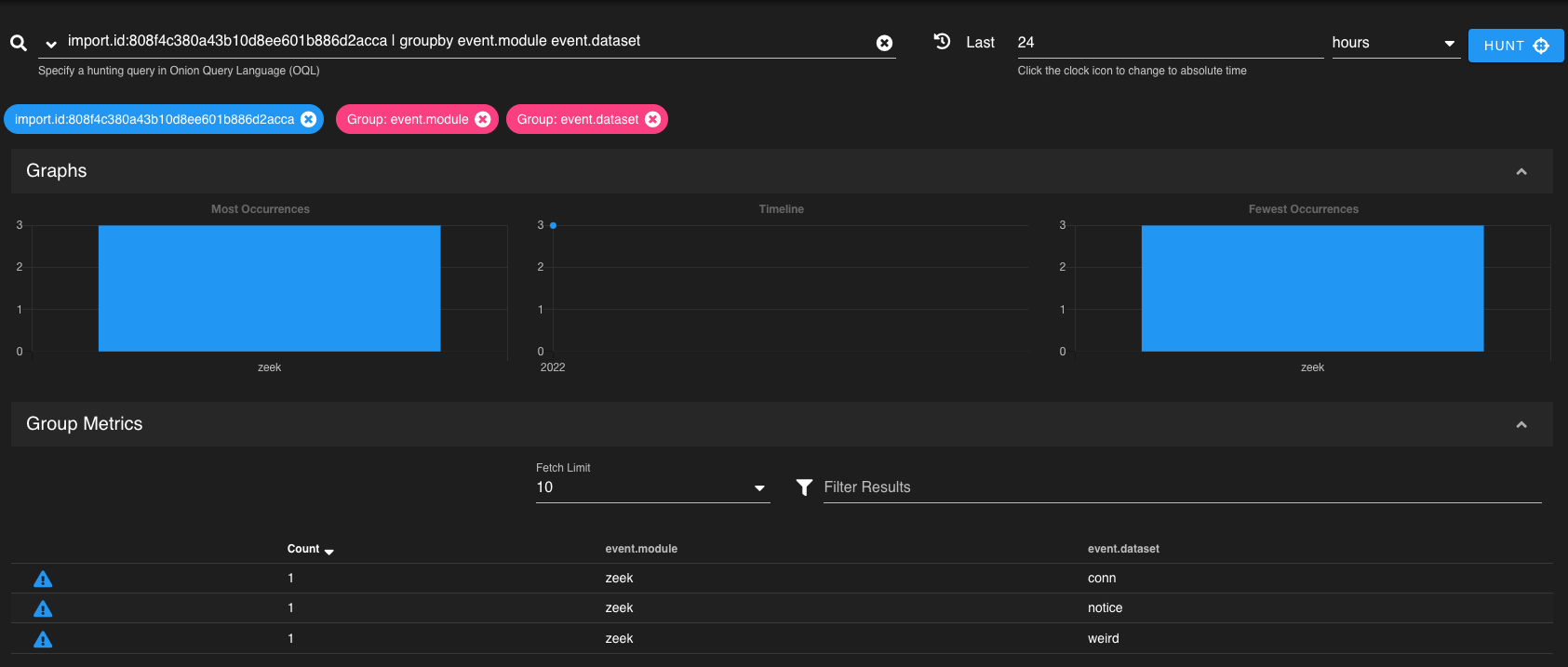

Once complete, we'll be provided with a link we can use to browse to the results of the imported PCAP in Hunt. After pasting the link in our browser, we can see that 3 records (from Zeek) were generated from our PCAP:

Enriched Data

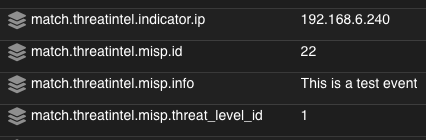

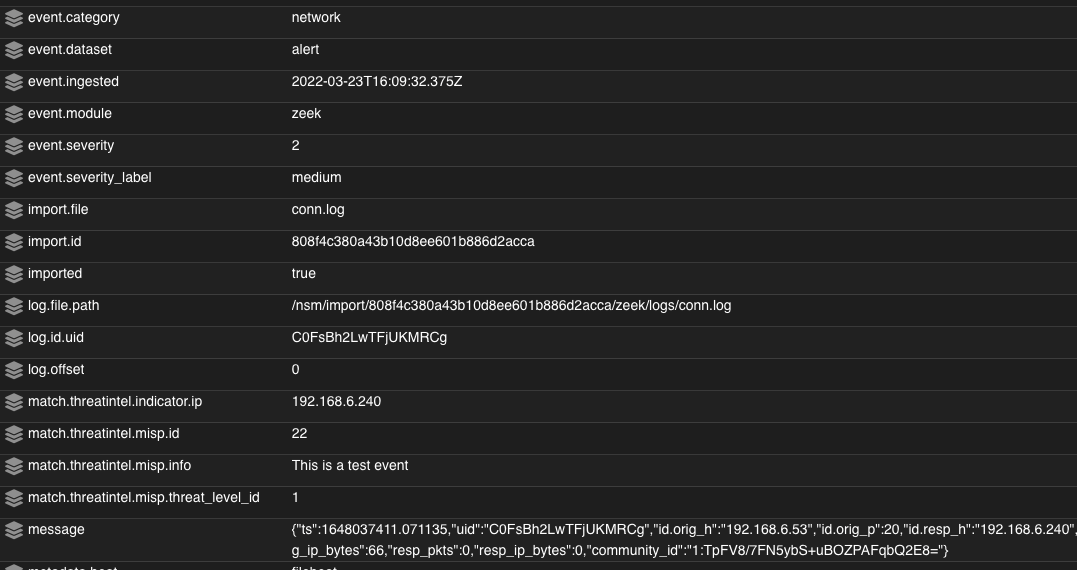

As events come through the pipeline, any events with a source.ip or destination.ip value that matches that of threatintel.indicator.ip from the MISP threat intel data will be enriched with the additional fields we defined. An enriched event will look like the following:

This data can then be queried and stacked within the Hunt interface for quick review.

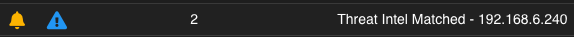

Alerting on Enriched Events

If we want to alert on events that have fields that match data from our threat intel, we can use Playbook to build detection rules, or we can modify our enrich pipeline so that alerts are generated and viewable directly in the Alerts console:

This is achieved by modifying /opt/so/saltstack/local/salt/elasticsearch/files/ingest/enrich.misp to look like this:

{
  "description" : "MISP Enrichment",
  "processors" : [
    { "enrich": { "policy_name": "ti-enrich-policy", "target_field": "match", "field": "source.ip", "ignore_failure": true }},
    { "enrich": { "policy_name": "ti-enrich-policy", "target_field": "match", "field": "destination.ip", "ignore_failure": true }},
    { "append": {"field": "tags", "value": ["{{ event.dataset }}"]}},
    { "set": { "if": "ctx?.match != null", "field": "event.dataset", "value": "alert", "override": true }},
    { "set": { "if": "ctx?.match != null", "field": "rule.name", "value": "Threat Intel Matched - {{ match.threatintel.indicator.ip }}", "override": true }},
    { "set": { "if": "ctx?.match != null", "field": "event.severity", "value": 2, "override": true }},
    { "set": { "if": "ctx.event?.severity == 2", "field": "event.severity_label", "value": "medium", "override": true }}
  ]
}

Once modified, restart Elasticsearch with so-elasticsearch-restart on all applicable nodes. That's it! You are now set up to enrich incoming events with threat intel data when the destination.ip or source.ip value matches that of threatintel.indicator.ip.
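To see what the extra processors do to an event, here is a small Python model of the append and set steps. It is a sketch of the logic only, operating on plain dicts with made-up events; apply_alert_processors is a hypothetical name. Two details stand out: the original dataset is appended to tags for every event, matched or not, and the severity label depends on the severity value rather than on the match itself.

```python
def apply_alert_processors(doc):
    """Model the append/set steps that follow the enrich processors."""
    event = doc.setdefault("event", {})
    matched = doc.get("match") is not None

    # append: unconditional, preserves the original dataset (e.g. "conn") in tags
    doc.setdefault("tags", []).append(event.get("dataset", ""))

    if matched:
        # set: turn the event into an alert and name it after the indicator
        event["dataset"] = "alert"
        indicator_ip = doc["match"]["threatintel"]["indicator"]["ip"]
        doc.setdefault("rule", {})["name"] = "Threat Intel Matched - {}".format(indicator_ip)
        event["severity"] = 2

    # set: applies to any event whose severity is 2, however it got that value
    if event.get("severity") == 2:
        event["severity_label"] = "medium"
    return doc


if __name__ == "__main__":
    enriched = {
        "event": {"dataset": "conn"},
        "destination": {"ip": "192.168.6.240"},
        "match": {"threatintel": {"indicator": {"ip": "192.168.6.240"}}},
    }
    print(apply_alert_processors(enriched))
```

Since event.dataset is overwritten with alert, the tags field is the place to look for the kind of log that produced the match.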

Manage Enrichment

We can manage enrichment at a scheduled interval by having a cron job execute our enrich policy and generate a new enrich index from the source index. Keep in mind, you’ll want to keep the interval somewhat in line with what you have set for the Filebeat module. If you are only pulling data once an hour at the module level, then it doesn’t make much sense to update the enrich index every 5 minutes. The values set in this article are for testing, and should be adjusted to suit your requirements.

To set up a cron job to manage the enrich index updates, run crontab -e on the manager and add a new line to the cron job containing the instructions to execute the enrich policy (watch out for line wrapping):

# TI Update

*/5 * * * * /usr/sbin/so-elasticsearch-query _enrich/policy/ti-enrich-policy/_execute -XPUT >> /opt/so/log/ti-enrich-policy.log 2>&1

Also, make sure the line is above the following line, as Salt manages all configuration below it:

# Lines below here are managed by Salt, do not edit

The enrich policy will now be executed every 5 minutes (*/5). To adjust the timing for this, consult official cron syntax for your desired interval:

Ex. https://crontab.guru/
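As a small convenience, the cron entry can be generated for a chosen interval. This sketch assumes we only want intervals that divide an hour evenly, since a step such as */7 leaves an uneven gap at the top of each hour. The function name enrich_cron_line is hypothetical; the command and log path are the ones used above.

```python
def enrich_cron_line(minutes, policy="ti-enrich-policy",
                     log_path="/opt/so/log/ti-enrich-policy.log"):
    """Return a crontab line that executes the enrich policy every N minutes."""
    if minutes == 60:
        schedule = "0 * * * *"  # once an hour, on the hour
    elif 1 <= minutes < 60 and 60 % minutes == 0:
        schedule = "*/{} * * * *".format(minutes)
    else:
        raise ValueError("use an interval that divides 60 evenly, or 60 for hourly")
    return (
        "{} /usr/sbin/so-elasticsearch-query _enrich/policy/{}/_execute -XPUT >> {} 2>&1"
    ).format(schedule, policy, log_path)


if __name__ == "__main__":
    print(enrich_cron_line(5))   # the testing interval used in this article
    print(enrich_cron_line(60))  # in line with an hourly Filebeat pull
```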

Conclusion

If you've been following along, congratulations! You should now have a pipeline for event enrichment, or at least a place to start developing more ideas around leveraging threat intel to enrich your events inside of Security Onion. The MISP fileset is just one of several that you can experiment with. Aside from the supported filesets, the Elasticsearch ingest enrich processor can also leverage data from other indices/data sources. The only limit is your imagination!

I'll be developing additional posts discussing other methods of enrichment and integration in the near future. Until then, stay tuned and happy hunting!